Cloud Dev Environments

I love me a good dev environment. There's nothing more comfortable than having your laptop set up just the way you like it. There was a senior engineer at Amazon whom I really looked up to. He had a slick Bash script that he ran any time he got a new laptop; it installed specific versions of his preferred tools and created working directories for his most common tasks. I was in awe of the genius of the idea, and I immediately copied it for my own use.

The first time I used a cloud-based IDE, I really expected to feel that same sense of wonder, but it never quite happened. Yes, I had a 'dev box' running on an EC2 instance. In theory, I could terminate it, grab a new one, and be up and running again in however long it took to provision a new EC2 instance. I should have been happy. But now my setup script was mostly super janky Bash commands trying to infer which version of Amazon Linux was running, or which type of NVMe storage the underlying instance was using.

The promise I was chasing was that I could use my 'regular' instance most days, and if I needed a GPU or a lot of extra storage, I could easily move my environment to a new instance when I started work for the day.

Without fail, every time I tried this, I'd spend until lunch debugging why torch.cuda.device_count() was returning 0.

I had fooled myself into thinking this was a problem I could solve with clever Bash scripting and diligence about what tools warranted a place in my beloved setup script.

I was slowly realizing that I needed something more than just a cloud-based IDE, but I still wasn't sure what that was.

Fast forward a few years, and I had nearly resigned myself to fighting with Lerna dependencies every time I wanted to contribute to the AWS Cloud Development Kit (CDK).

Without fail, every time I forked the repo to add a new construct, I'd spend an hour wrestling with my system's Node version.

One day I noticed a button on the repo's README labeled 'Open in Gitpod', and ever since then I have measured my life by that day.

I started working at Amazon three years and seven months BG (Before Gitpod).

My son was born four years and one month AG (After Gitpod).

Look at the configuration from the commit that added Gitpod support:

```yaml
image: jsii/superchain
tasks:
  - init: yarn build --skip-test --no-bail
vscode:
  extensions:
    - [email protected]:9Wg0Glx/TwD8ElFBg+FKcQ==
```

Six lines. Six lines is all it took to ensure that everybody who wanted to contribute to the AWS CDK would never spend more than a few seconds setting up their environment. This was the one-click setup I had been looking for, and they even had a name for it: Cloud Dev Environments.

It felt so obvious after I started using it.

I didn't need just 'a laptop in the cloud'.

I needed the entire dev environment, and here was a product that made offering that one-click setup so easy it fit in a tweet.

I could even keep using my clever Bash commands in the init task.

What's not to love?

My main complaint was that everything ran in a single Docker image.

What I really wanted was the ability to create an ephemeral SQL database that wasn't running in the same container as my app, seed it with sample data, set up the connection to it from my local dev environment, and kick off Jupyter locally, all in one easy-to-package bundle.

In early October, Gitpod launched 'Gitpod Flex', which introduced a bunch of new features that really bridge the gap between a well-configured Docker container in the cloud and a true 'cloud dev environment'. The good people at Gitpod asked me to give it another shot, see whether the new launch hit the mark, and write about my experience.

Let's walk through a quick demo app I created using the new devcontainer-based setup and see how it goes.

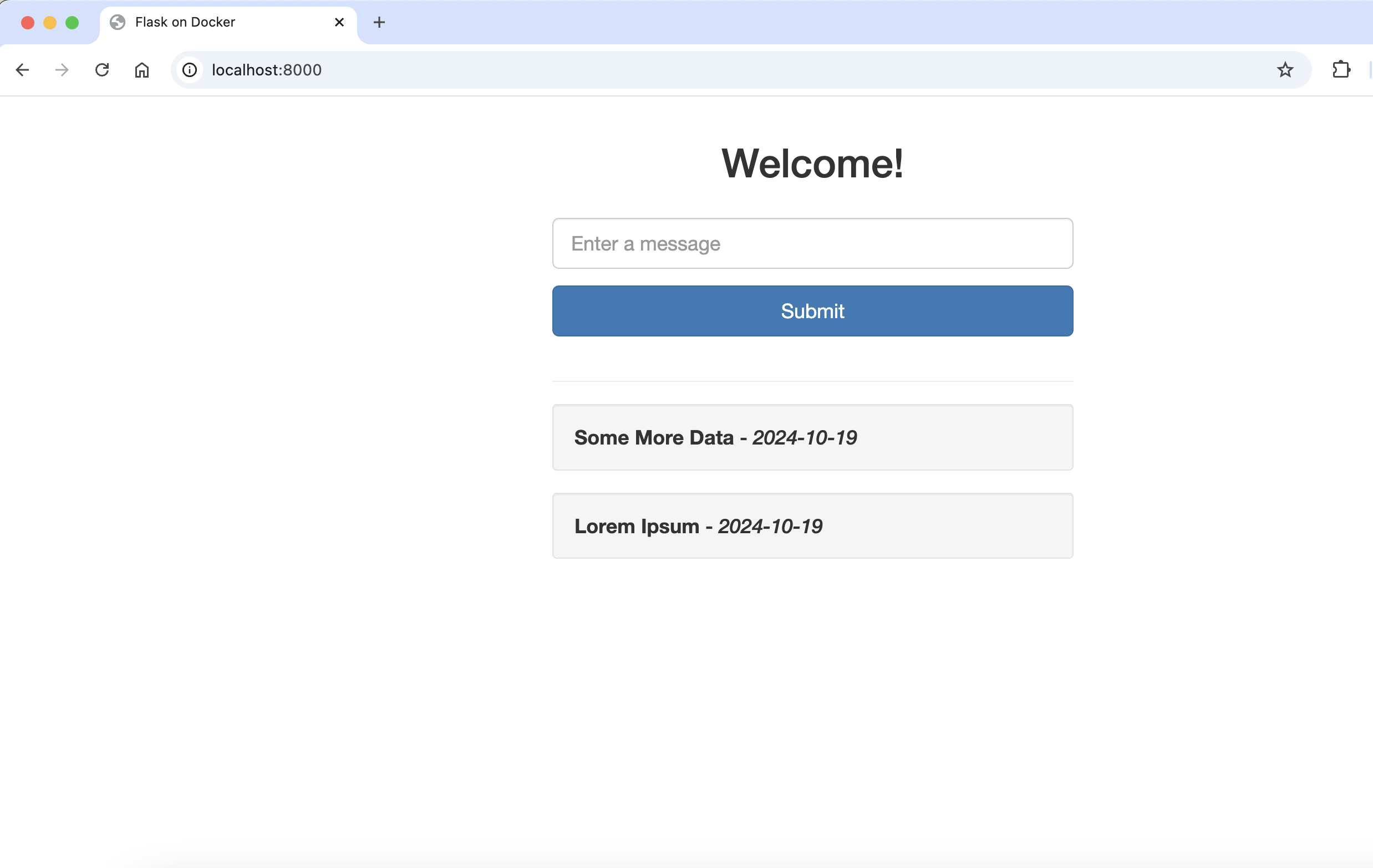

I created a sample app that uses two containers: a super basic PostgreSQL database and a Python Flask web application.

I add a new file to the .gitpod/ directory called automations.yaml.

```yaml
# defines automation tasks (optional)
tasks:
  populateDB:
    # Name is a required, human readable string.
    name: Add test data to the database
    description: makes POST requests to the web server that populate the database with data
    triggeredBy:
      - manual
    command: |
      curl 'http://localhost:8000/submit' \
        -H 'Accept: text/html' \
        -H 'Accept-Language: en-US,en;q=0.9' \
        -H 'Cache-Control: no-cache' \
        -H 'Content-Type: application/x-www-form-urlencoded' \
        --data-raw 'text=Lorem+Ipsum'
      curl 'http://localhost:8000/submit' \
        -H 'Accept: text/html' \
        -H 'Accept-Language: en-US,en;q=0.9' \
        -H 'Cache-Control: no-cache' \
        -H 'Content-Type: application/x-www-form-urlencoded' \
        --data-raw 'text=Some+More+Data'
```

This automation will push two records into the database by simulating a request to the web application.
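If you would rather script the seeding step than keep it inline in YAML, here is a minimal Python sketch of the same two POST requests using only the standard library. It assumes, as the curl commands do, that the Flask app accepts form posts at http://localhost:8000/submit with a single text field. The names build_submit_request and seed are hypothetical; they are not part of Gitpod or the demo app.

```python
"""Hypothetical Python equivalent of the populateDB automation's two curl commands."""
import urllib.parse
import urllib.request

# Assumption: same endpoint and headers as the curl commands in automations.yaml.
SUBMIT_URL = "http://localhost:8000/submit"
HEADERS = {
    "Accept": "text/html",
    "Accept-Language": "en-US,en;q=0.9",
    "Cache-Control": "no-cache",
    "Content-Type": "application/x-www-form-urlencoded",
}


def build_submit_request(text: str, url: str = SUBMIT_URL) -> urllib.request.Request:
    """Build (but don't send) a form POST equivalent to curl --data-raw 'text=...'."""
    # urlencode uses quote_plus, so spaces become '+': "Lorem Ipsum" -> "text=Lorem+Ipsum".
    body = urllib.parse.urlencode({"text": text}).encode("ascii")
    return urllib.request.Request(url, data=body, headers=HEADERS, method="POST")


def seed(texts: list[str], url: str = SUBMIT_URL) -> list[int]:
    """POST each text in order and return the HTTP status codes.

    urlopen follows redirects and raises urllib.error.HTTPError on 4xx/5xx responses.
    """
    statuses = []
    for text in texts:
        with urllib.request.urlopen(build_submit_request(text, url), timeout=10) as resp:
            statuses.append(resp.status)
    return statuses


if __name__ == "__main__":
    print(seed(["Lorem Ipsum", "Some More Data"]))
```

Keeping request construction separate from sending makes it easy to check the exact body being posted without a running server.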

I left the trigger as manual so that I can do some inspecting of the setup before deciding if I want to populate the database or not.
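Keeping the trigger manual also sidesteps a timing question: an automatic seeding step could fire before the web server is listening. If you ever switch the task to an automatic trigger, a small readiness check like the hypothetical wait_for_port below is one way to guard the POSTs. This is a sketch that assumes "port 8000 accepts TCP connections" is a good enough signal that the app is ready; it is not a Gitpod feature.

```python
"""Hypothetical readiness check: wait until a TCP port accepts connections."""
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once host:port accepts a TCP connection, or False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError (e.g. ConnectionRefusedError) if nothing is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Assumption: the demo's Flask app listens on localhost:8000, as in the curl commands.
    if not wait_for_port("localhost", 8000):
        raise SystemExit("web server on localhost:8000 never came up")
    print("web server is up; safe to seed the database")
```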

Note: PLEASE don't use this setup in production. "postgres" is a terrible password and shouldn't be included in your source code.

Usually, you would use something like HashiCorp Vault to store and retrieve these usernames and passwords.
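Short of wiring up Vault, a common first step is to keep the password out of the repository entirely and read it from the environment at runtime, where a secret store (Vault or otherwise) can inject it. The sketch below reads the PG* variable names that libpq itself recognizes (PGHOST, PGPORT, PGUSER, PGPASSWORD, PGDATABASE) and builds a connection URL. The database_url helper and its local-dev defaults are assumptions for illustration, not part of the demo app.

```python
"""Hypothetical helper: build a Postgres URL from environment variables, not source code."""
import os
from typing import Mapping, Optional
from urllib.parse import quote


def database_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Return a postgresql:// URL built from libpq-style PG* variables.

    The password is required; everything else falls back to an illustrative
    local-dev default (these defaults are assumptions).
    """
    env = os.environ if env is None else env
    password = env.get("PGPASSWORD")
    if not password:
        # Refuse to fall back to a hardcoded password such as "postgres".
        raise RuntimeError("PGPASSWORD is not set; inject it from your secret store")
    user = env.get("PGUSER", "postgres")
    host = env.get("PGHOST", "localhost")
    port = env.get("PGPORT", "5432")
    dbname = env.get("PGDATABASE", "postgres")
    # Percent-encode credentials so characters like '@' or ':' can't break the URL.
    return f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}/{dbname}"
```

Because it raises instead of defaulting, a missing secret shows up as a clear startup error rather than a silent fallback to "postgres".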

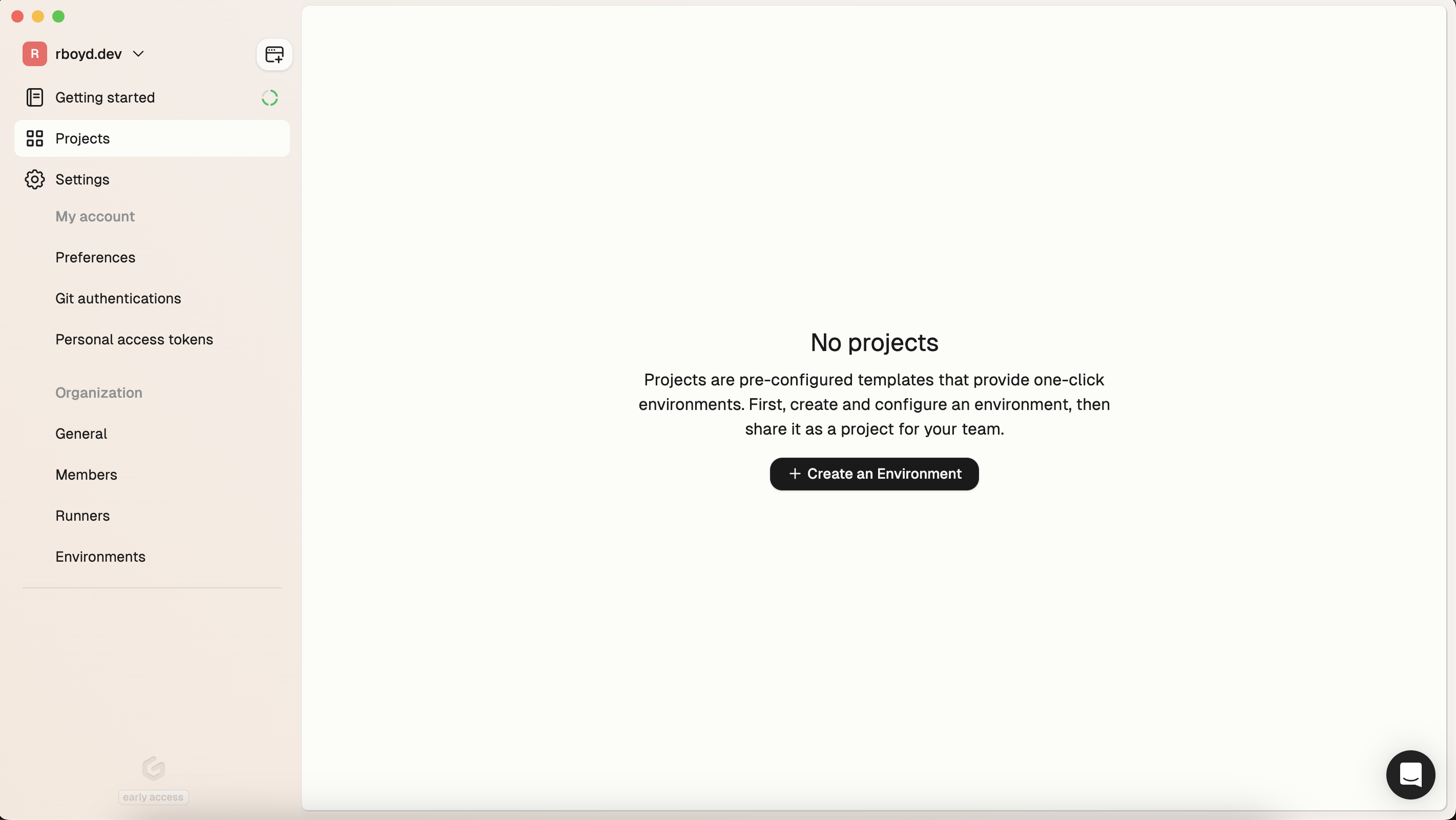

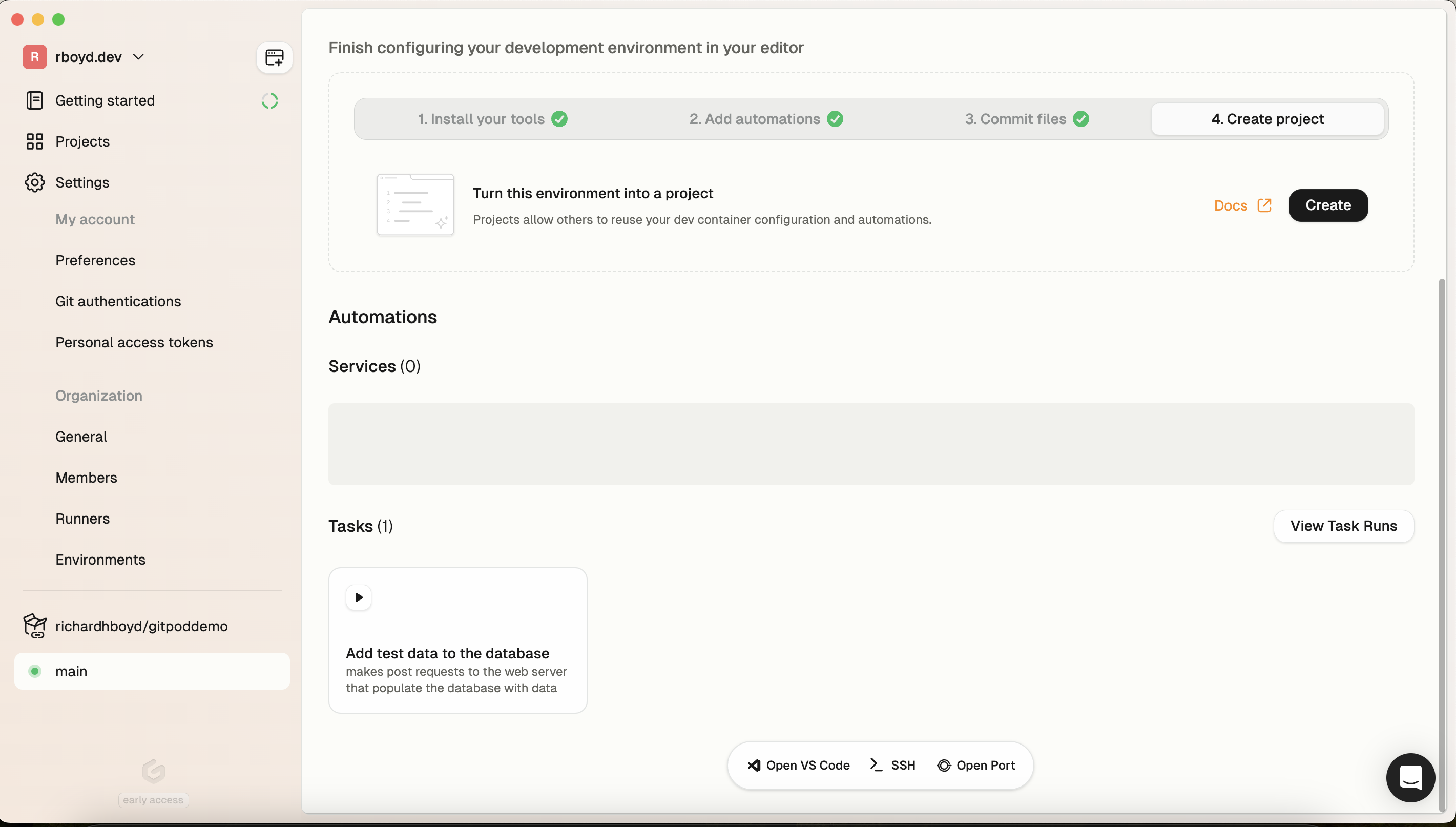

I open up Gitpod Desktop.

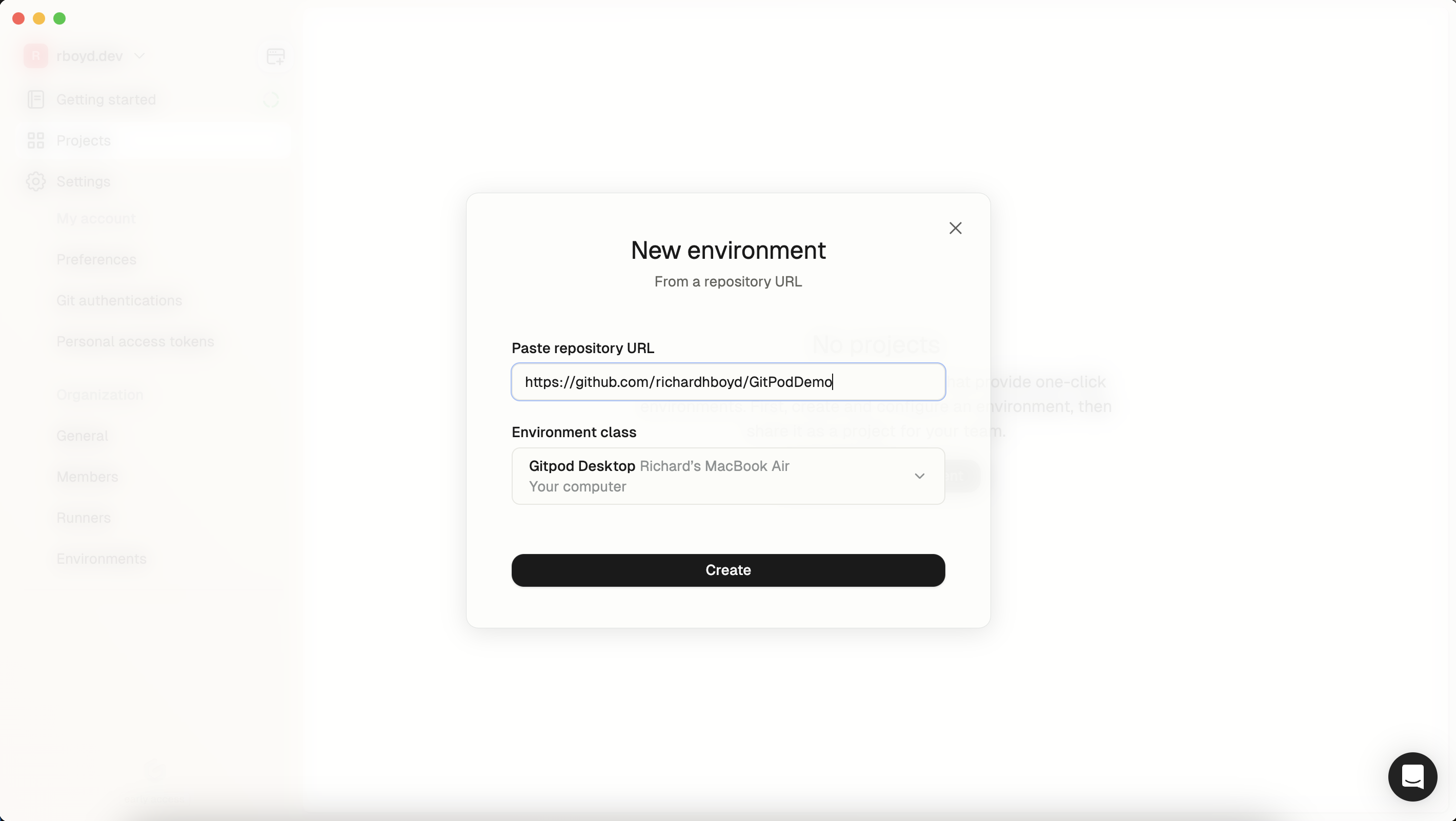

I tell Gitpod to use my sample repo as the source for the environment.

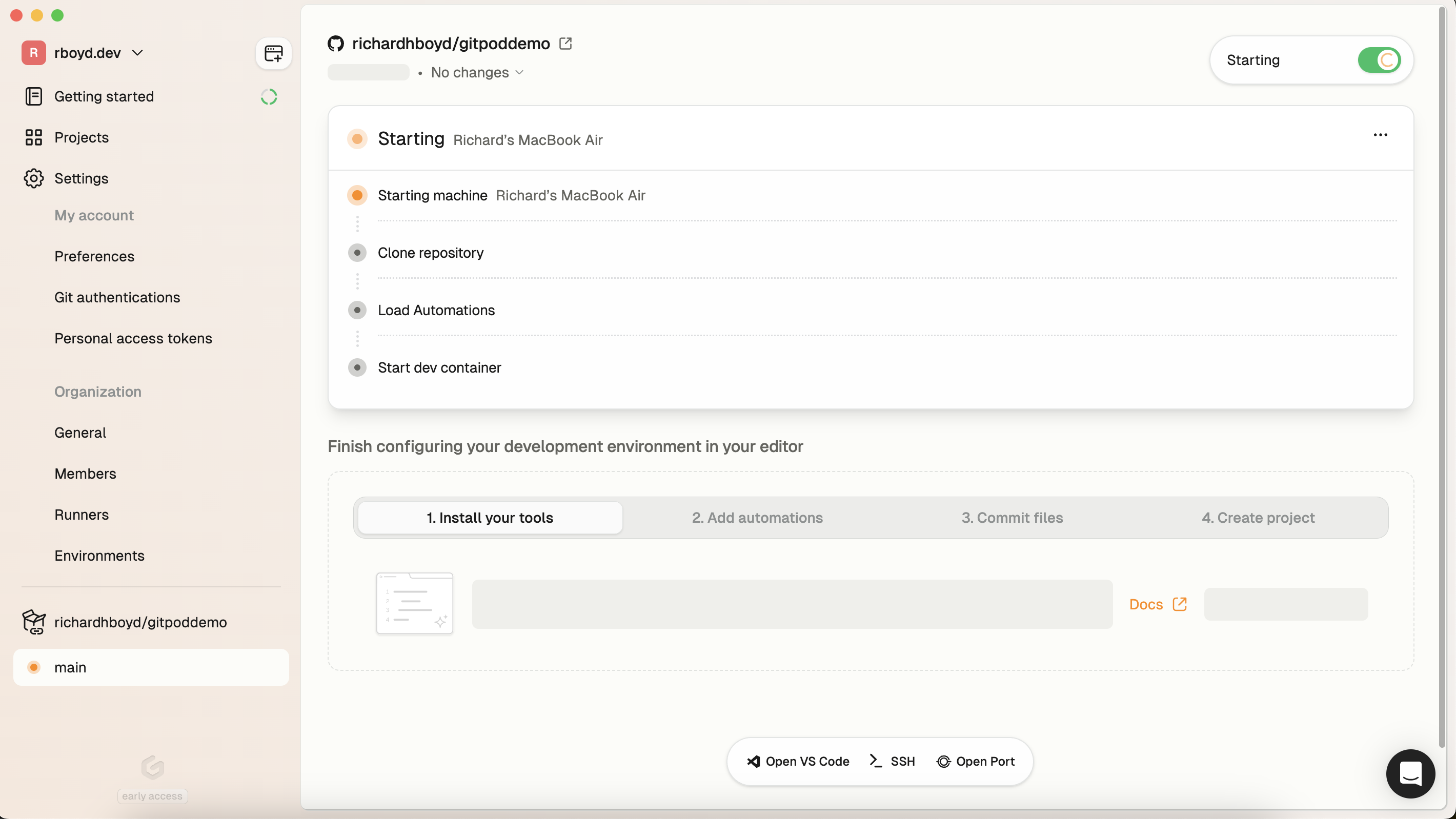

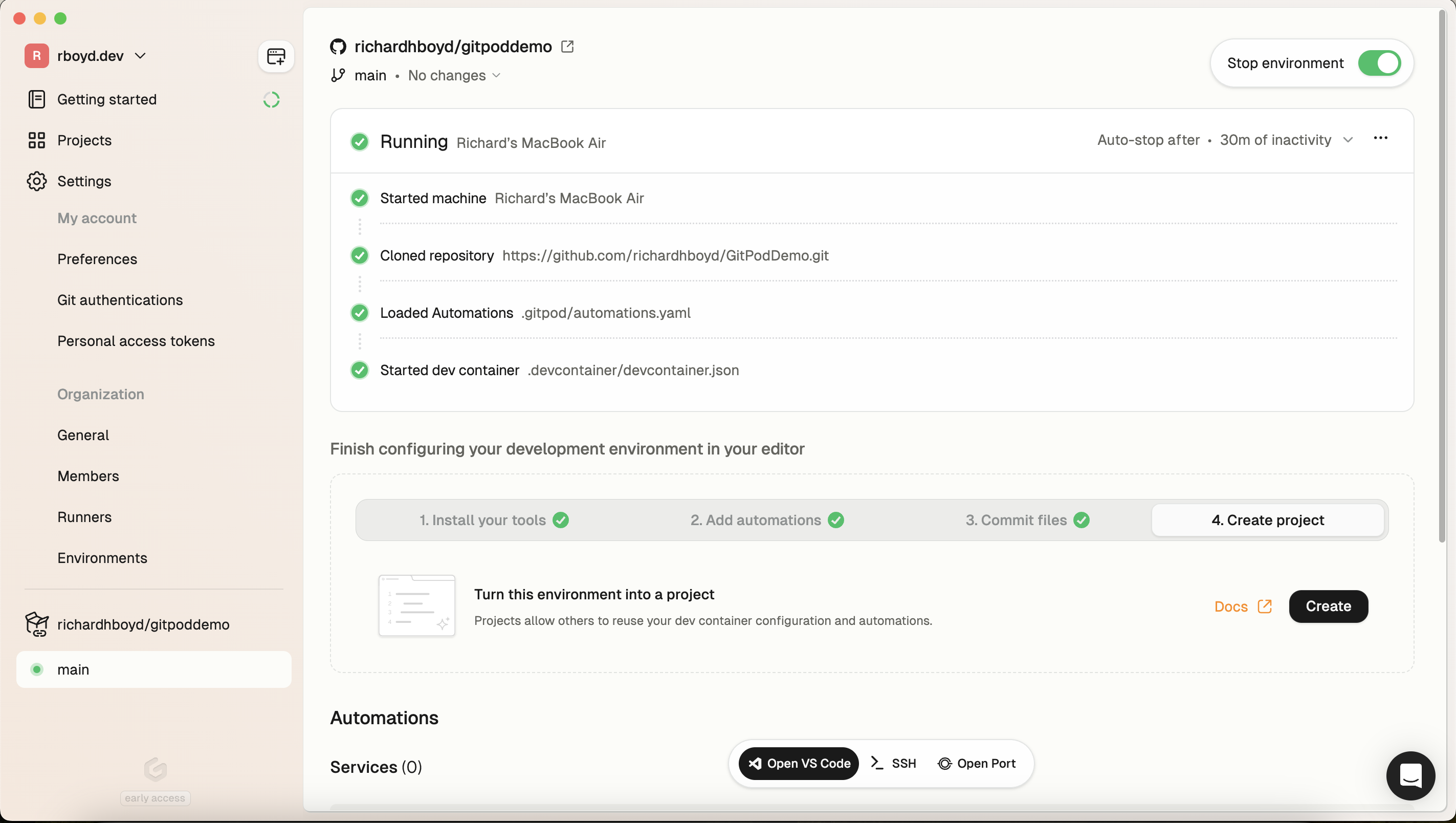

The environment starts in about 2 minutes.

Once the environment has started, I can open VS Code locally.

Or I can view the automation tasks.

Each 'manual' task appears as a button that can be triggered as needed.

Finally, I can open a browser window and see my sample app up and running.

I'd love to see what you build with Gitpod. Feel free to tweet at me with whatever you come up with.